More is More

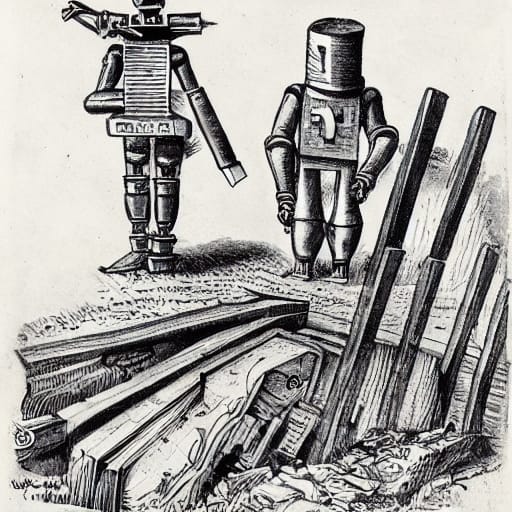

Technocapital makes its own gravediggers

Any discourse on AI that begins from the premise that generative AI represents a truly new innovation, even revolution, is utterly useless. This discourse is, much like the AI it talks about, a new ideological falsehood stacked upon a tower of previous falsehoods, half-truths about automation that go back at least to the turn of the 20th century.

The thing about the internet is that it’s Mechanical Turks all the way down. The Mechanical Turk, for those not familiar, was a humanoid automaton from the 18th century that seemed able to play chess entirely on its own. The robot sat at a chess table, defeated legions of challengers, and was hailed as a technological marvel until it was revealed that a human chess master hidden inside the cabinet had been playing the game all along: classic flim-flam.

Every time you “proved you’re human” by selecting crosswalks and motorcycles in a grid of photographs, you were building the dataset for self-driving cars. Despite the fact that billions of these procedures have been carried out by real human beings, autonomous cars still don’t work very well.

A huge share of the tasks that apps and web services seem to perform automatically are in fact constantly supervised and enhanced by workers, and on many occasions simply done by them. This is literalized in the fact that Amazon has, for over a decade, maintained an ultra-gig workforce that it unironically calls “Mechanical Turk”, where you can get hired for pennies to do incredibly repetitive tasks that tech companies pretend their programming automates.

Even the things computers are genuinely good at doing more or less automatically (scraping and amassing data; counting) have hit a significant ceiling in their usefulness. A well-known problem in data science is the enormous difficulty of turning the uncountable petabytes of information corporations amass into usable directives. Many of the people at the very highest levels of research in medicine, engineering, mathematics and technology spend their time devising algorithms that make sense of these datasets in ways people can actually use.

This problem, of noise overwhelming signal, is an unsurprising result of the market driving technological progress. For decades now the conmen of Northern California have convinced governments and corporations alike that their incredible capacity to produce and amass data is a valuable market proposition in and of itself. Mountains of almost useless data are something computers can produce cheaply and efficiently (particularly if you can provide a sufficiently seductive front-end product, like cheap goods, social networking or fun video games, that gets millions of users to hand that data over voluntarily), and they are equally easy to sell to gullible clients swept up in the hype cycles and grift wars of the business world.

This is also true of the state, which, since the PATRIOT Act, has gathered a truly dystopian amount of data about every person in the country and on the planet, yet seems no better at preventing uprisings, repressing political enemies or maintaining control over its territory than it was before. If the Eye of Sauron turns on you, it is quite capable of crushing you utterly: the state can find out horrifying levels of detail about its targets. But since 2011 the state and global capitalism have faced consistent, fierce and ongoing challenges from the streets at a level unseen since the long 60s. The surveillance state is a nightmare of repression and control, but it is not the decisive weapon in the class war its creators had hoped it would be.

This is a classic capitalist error, mistaking quantity for quality, produced by capitalists applying the logic of their most treasured commodity, money, to the social, informational, cultural and scientific worlds they dominate. Because money is universally exchangeable, because it can substitute for every other commodity on the market, they apply the fundamental ideological equations of money (more = better; more creates more) to every other level of social life.

Generative AI is a system designed to accelerate the process of turning already existing data into novel data. Existing images, words and ideas are scraped, blended and repurposed into a series of images, words and ideas that can more or less pass the smell test at a glance. Because Silicon Valley operates on the (absolutely incorrect) presumption that more data is better, it has created a technology capable of generating genuinely novel data to add to the already too-large hoards. In an era of image exhaustion, when memes become clichéd shadows of themselves within days or even hours of their emergence, when the very idea of novelty feels quaint, in an era of end times and endlessly recycled fashions, ideas and stories, it is no wonder people are so excited by a technology that can consistently produce new images.

The problem is, of course, how wildly unreliable most of these images are. The system is not designed around the principle of better information; it is designed around the principle of more information. More information sells, and OpenAI’s market valuation indicates it sells fucking well, despite the absence of demonstrable business applications for the technology. The images may be new, but the hype-driven, cash-burning failure to turn an actual profit is a tale as old as the Valley.

AI creates things human beings would never produce. In the realm of images, people who lack the visual design skills to create the images they can imagine (which, if you ask me, would be a cool basic premise in a different political economy) are able to get something passable. These images, however, will never be valuable. Much like the NFTs whose footsteps they follow, they lack the historically constructed systems of value production that the art world and the culture makers have created, and so they can only be used by managers to drive down the value of graphic design and commercial art labor.

In the realm of words it is even starker: AI overwhelmingly creates gibberish no human being would actually write, or passable paragraphs that are completely factually incorrect, amounting to dangerous misinformation. The companies that laid off their writing staff with great fanfare earlier in the year have been quietly rehiring writers to act as editors on AI-generated texts.

Generative AI has been yet another Silicon Valley innovation that uses the whiz-bang of the new to give bosses and governments an excuse to drive down wages.

But it also has a strange knock-on effect. Having been fed so much “real” data from the human world, gathered by pushing surveillance-driven data production to its absolute limits, AI now produces novel data that is demonstrably, absurdly false. This will create new submarkets in the economy for data cleaners, truth verifiers and AI sniffers, who will always be one step behind the scammers and criminals using AI to peel money from the unsuspecting. But it also compounds the intense problems that states and corporations already face in actually using the power this data is supposed to give them.

When local police departments start integrating AI, in other words, how often will they end up doing SWAT raids on Comet Ping Pong? We already know that QAnon and conspiracy theories have deeply penetrated law enforcement and government communities. Who knows what absolutely unhinged nonsense AI algorithms are teaching the CIA and the FBI to believe at this very moment?

This complete breakdown of the value and quality of information (again, an already ongoing and serious problem merely accelerated by generative AI) is not in and of itself enough to destroy state power, since state power is largely a question of justification and spectacle. I’m no accelerationist, and the police have always been party to paranoia, misconception, grift and confusion; it doesn’t ultimately matter, because their techniques of overwhelming violence are sufficient to maintain levels of social peace acceptable to capital and state. And they will certainly try to use AI image production and deepfakes to frame and convict people.

But coupled with the collapse of their social legitimacy, generalized distrust in institutions, continually accelerating natural disaster and crisis, and perhaps a looming economic crash, generative AI is just one more tool that is going to weaken the state's narrative grasp over events. The conspiracist fash have so far been very good at fighting over this terrain, but as with so many objects of power in this twilight of empire, the capitalist machine keeps loosening the state's hold and leaving more and more up for grabs by movement and struggle.